For the second time this year, the Facebook page operated by TeleSUR English was briefly unpublished. One of TeleSUR’s formal responses during that time was an article, published in the New York-based journal Jacobin, rebuking the host of their social media account.

In the article “Why Did Facebook Purge TeleSUR English?”, whose title alludes to the popular movie franchise about a day-long cessation of legal enforcement against crime, Branko Marcetic commits a number of grave sins against his readers. The piece contains so much irony that it’s worth coining a new literary term, “Orwellian irony”, which I briefly describe in this article but go into depth on here.

For one, Branko Marcetic misrepresents the situation in such a way as to leave the reader misinformed of the facts of the matter.

Second, he does not cite the proper precedents that would accurately contextualize the case he describes.

Additionally, he misattributes intentions to multiple actors and closes with a call to action that is based on these misunderstandings.

In other words, a close examination of a narrative intended to gain public support for TeleSUR English, by claiming that Marcetic’s employer has been victimized by a conspiracy, instead provides evidence as to why Facebook should permanently unpublish TeleSUR English’s Facebook page.

Misconstruing Censorship For Community Standards

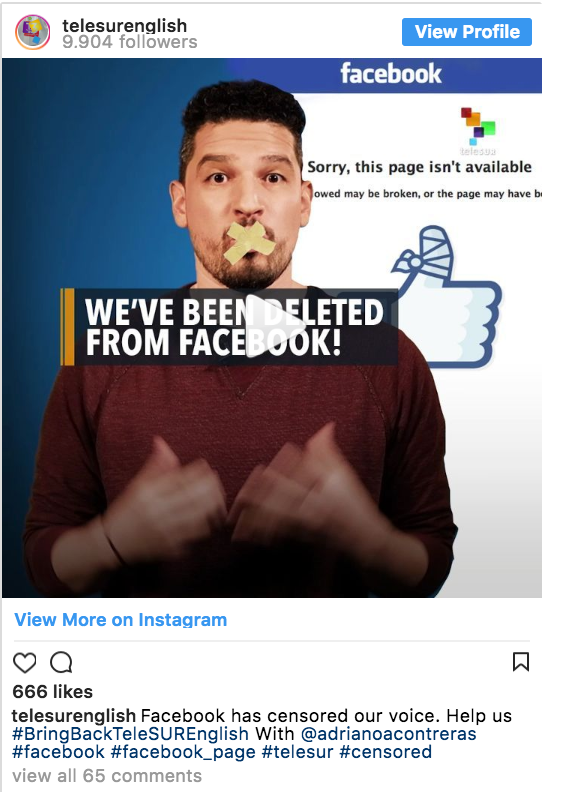

Repeating many of the talking points made in the Jacobin article, the above video shows Adriano Contreras with tape over his mouth, as if he were being prevented from speaking. But framing the unpublishing as censorship ignores several facts, the most important being that it in no way impacted TeleSUR English’s ability to publish content.

Unlike the Palmer Raids in the U.S. at the beginning of the 20th century, no equipment for the production of media was seized or destroyed and no reporters have been jailed. No news facilities were shuttered, as has been the case in Venezuela, and no reporters were attacked by state or para-state actors.

TeleSUR never lost their ability to send out mail, a restriction that American leftists faced in the wake of the Espionage Acts, and neither their daily email newsletter nor their website was taken down.

During the period of the brief unpublishing, TeleSUR English’s web domain and other social media outlets, such as Abby Martin’s The Empire Files or The World Today with Tariq Ali, could still be shared on Facebook’s social media platform.

If we are to accept Marcetic’s uncommon interpretation of American jurisprudence, Jacobin is similarly guilty of “censorship” should they choose not to publish ads on their website to promote a new edition of The Turner Diaries. However, this is not the case, as the right to free speech doesn’t mean that someone else is forced to distribute that speech.

It’s worth noting that this is not the first time that TeleSUR has misrepresented Facebook.

In an article following the leak of internal Facebook information, TeleSUR shared more false information about the company.

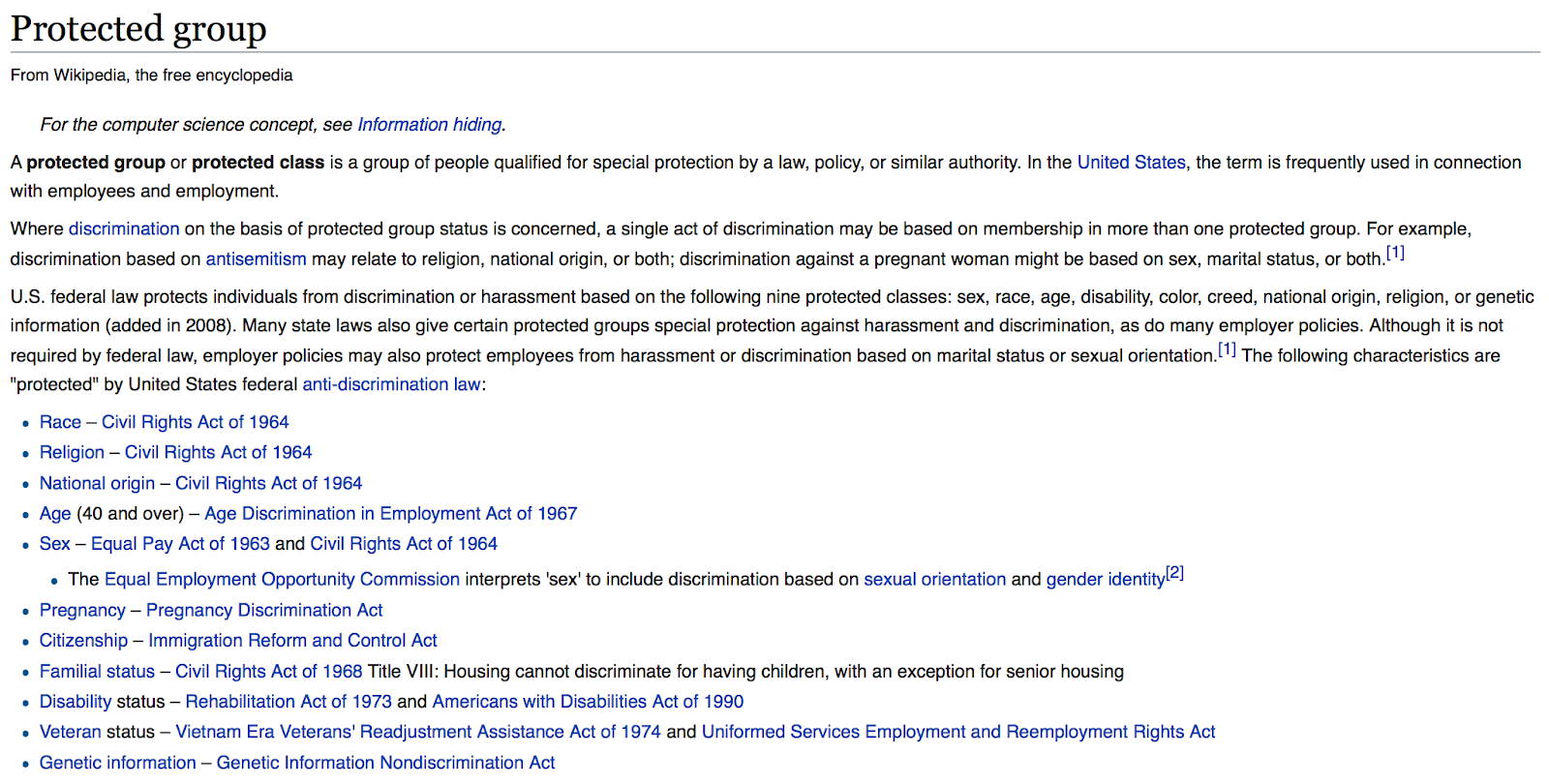

The issue at hand here is a fundamental misunderstanding of what protected groups are. TeleSUR English shifts away from legal terminology to instead talk about privileged groups in order to imply that Facebook is inherently racist. Could a better example have been given to those acting as Facebook censors? Yes, but as you can see from the below, protected groups don’t include drivers or children.

While it’s possible that Marcetic’s upside-down interpretation of the American legal terms and enforcement history relates to his having studied the United States in Australia, the second misreading of legal context hints at something more purposeful.

Misunderstanding Author-Publisher Relations

In the numerous defenses of TeleSUR English following their unpublishing, Branko Marcetic, Abby Martin and others ignore the fact that prior to being granted access to the Facebook platform, users must agree to its terms and conditions.

This isn’t unique to Facebook, but applies across all social media publishing platforms as well as the news publishing industry. Letters to the editor in newspapers are vetted and sometimes edited before publication, just as articles written by paid staff members are fact-checked for quality assurance. Academic journals will send back original research for any number of reasons, and similarly many Facebook groups have rules for posting that will lead to those violating them getting removed from the group.

The current director of TeleSUR English’s operations, Orlando Perez, has previously defended the rights of a publisher to set editorial guidelines that determine whether or not an article is published. He even stated that it was sensible to cease business relations with an author for attempting to make a public scandal of the incident.

Had the unpublishing of TeleSUR English been part of a large-scale “purge” of leftist perspectives, then we could accurately describe that event as censorship. But that didn’t happen, which then raises the question: if TeleSUR English wasn’t unpublished for its content, then why was it?

Paranoia and Conspiracy: What Happens When Journalists Don’t Research Their Stories

It’s at this point in their reporting on the issues that Branko Marcetic, TeleSUR reporter Abby Martin, comedian Jimmy Dore, and news commentators such as Ben Swann and Caitlin Johnstone all do something worse than merely misrepresenting the context of the news: they fail to examine the evidence.

Even worse than making no attempt to research why Facebook would unpublish TeleSUR English based on the statement provided to them, these “journalists” rely on the words of a former TeleSUR employee who has not worked there in several months, Pablo Vivanco, to validate their view that this was censorship.

Let’s examine the evidence and see how it is that Facebook may have been correct in unpublishing TeleSUR English.

Examining Evidence Part I: Public Admission of a Bad Actor

Pablo Vivanco’s use as a source in this matter is another example of Orwellian irony, for it was his assigning or purchasing of marketing services to artificially boost follower and engagement numbers that likely led to TeleSUR English being unpublished.

Google the name of the former Director of TeleSUR English, Pablo Vivanco, and you will learn that, in contrast to his statement to RT on August 14th of this year, he admitted to doing this while speaking on the 2016 Building Left Media in the Digital Commons panel at Left Forum.

This fact was repeated in several interviews I’ve conducted with current and former TeleSUR English employees over the past year, who also stated that this practice was known about throughout the organization and that Vivanco’s former assistant, Cyril Mychalejko, helped direct it.

Though Pablo Vivanco implies that they stopped doing this in his official statement, had any of these “journalists” done any investigation they would have learned the real truth of the matter and come to a much different conclusion.

Examining Evidence Part II: Public Admission of a Bad Actor

Though current and former TeleSUR employees and contractors, like Branko Marcetic and Abby Martin, quote Pablo Vivanco as evidence that Facebook’s unpublishing is censorship, a review of his personal profile provides evidence as to why Facebook’s algorithm unpublished the account.

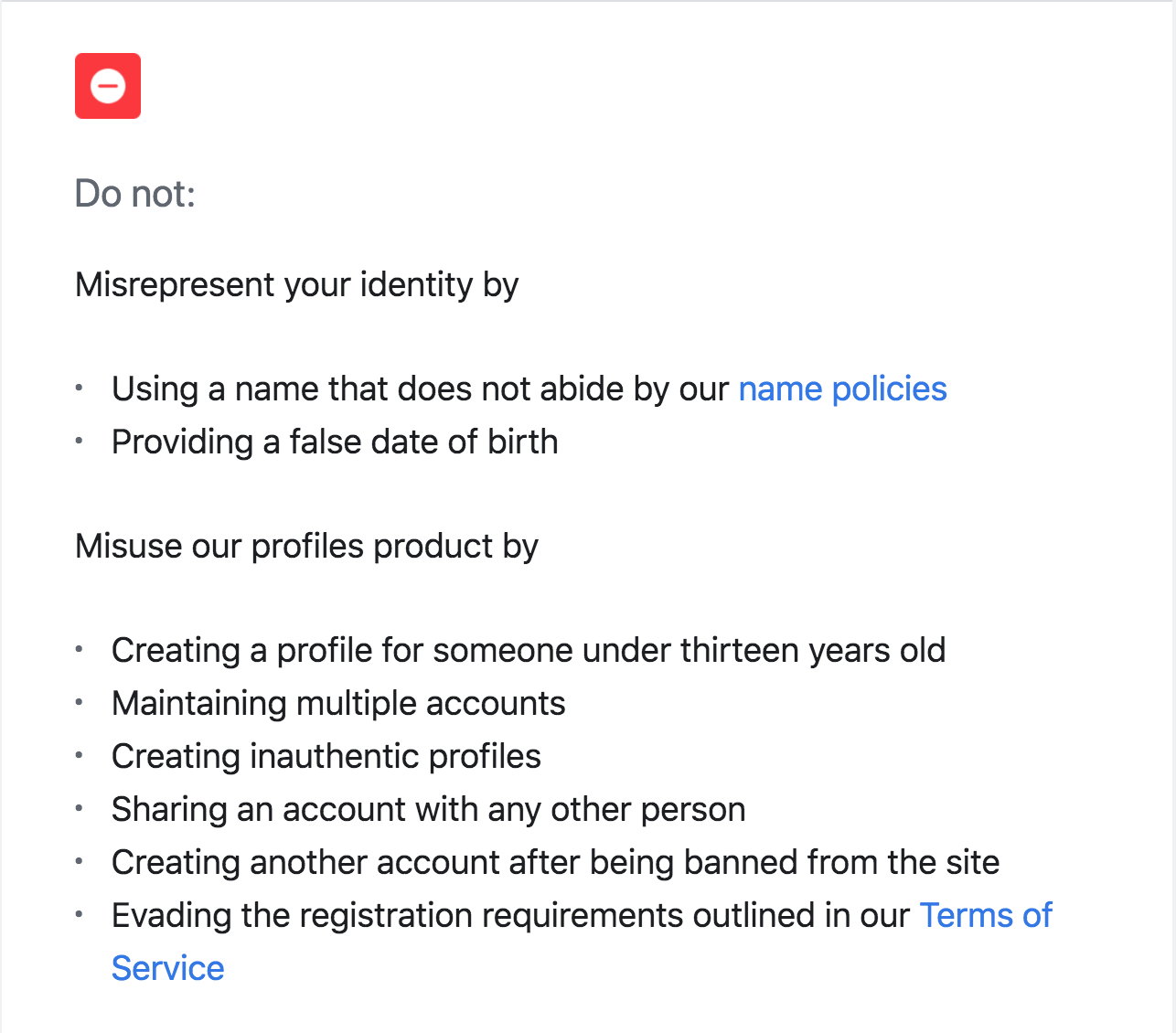

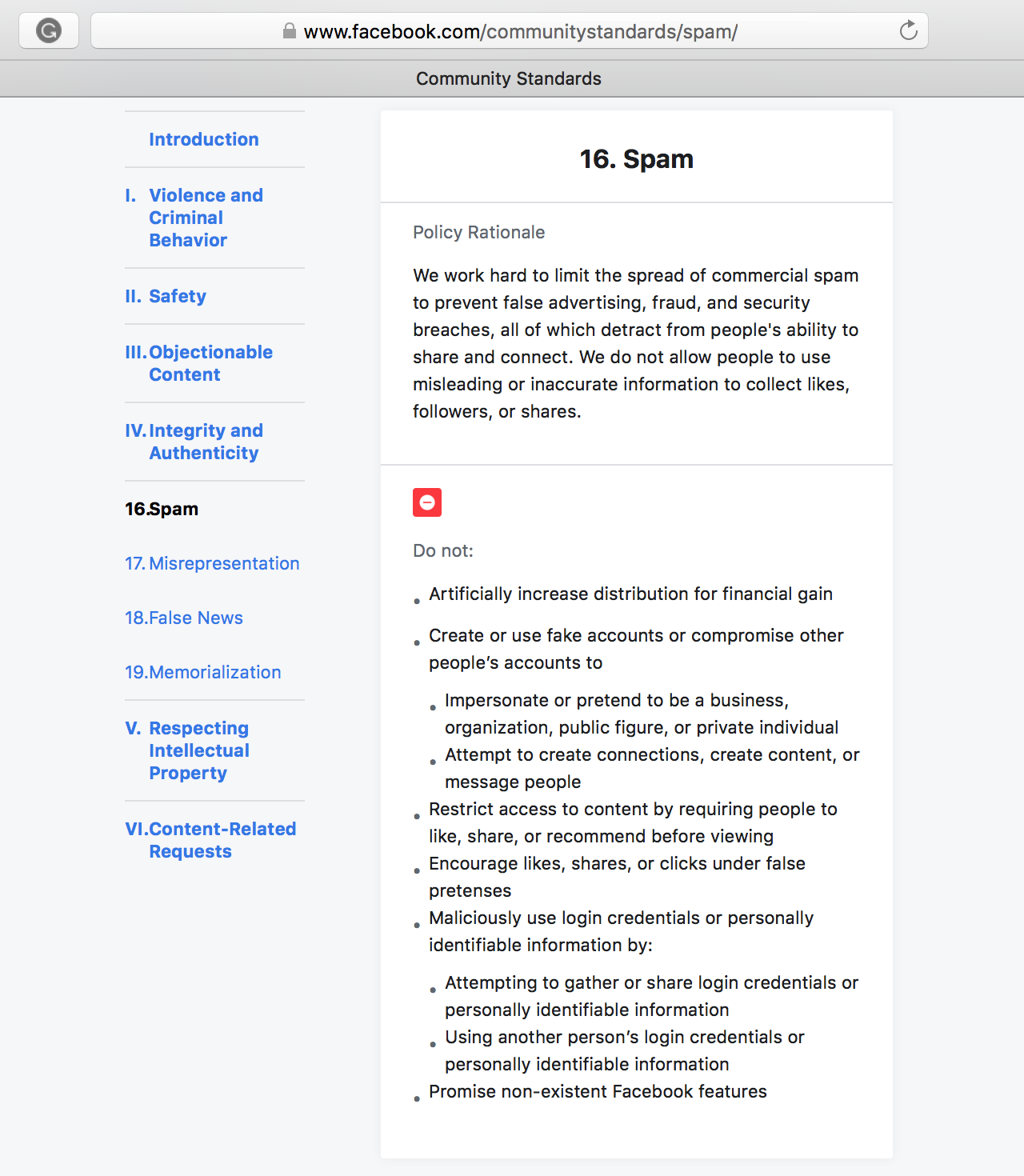

If one reviews Facebook’s Terms of Service, it immediately becomes apparent that Pablo Vivanco is not being honest when he states that there is no reason to have been unpublished.

Clearly, TeleSUR English has violated the Terms of Service.

Examining Evidence Part III: Private Admission of a Bad Actor

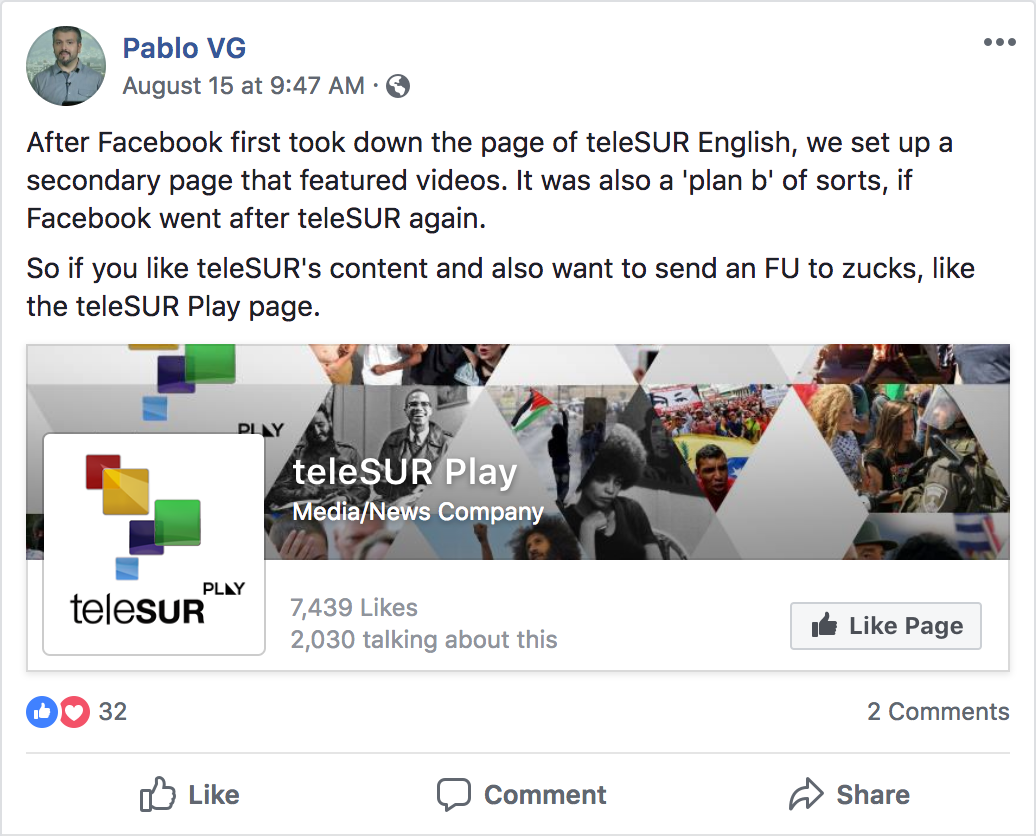

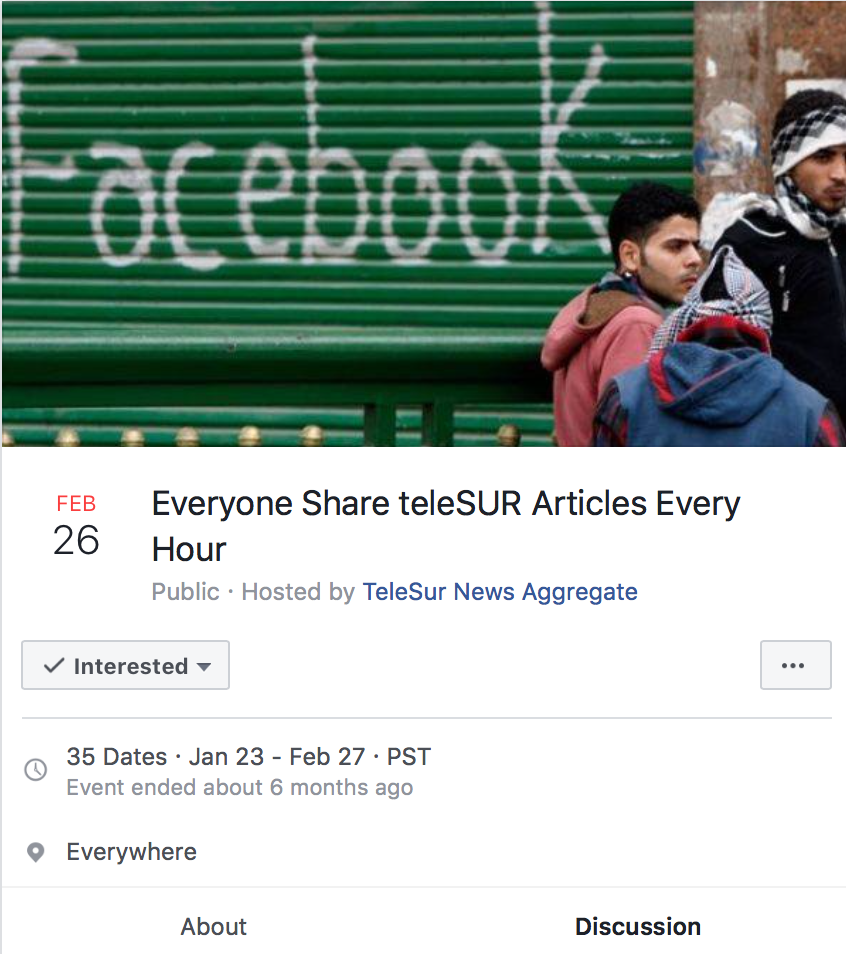

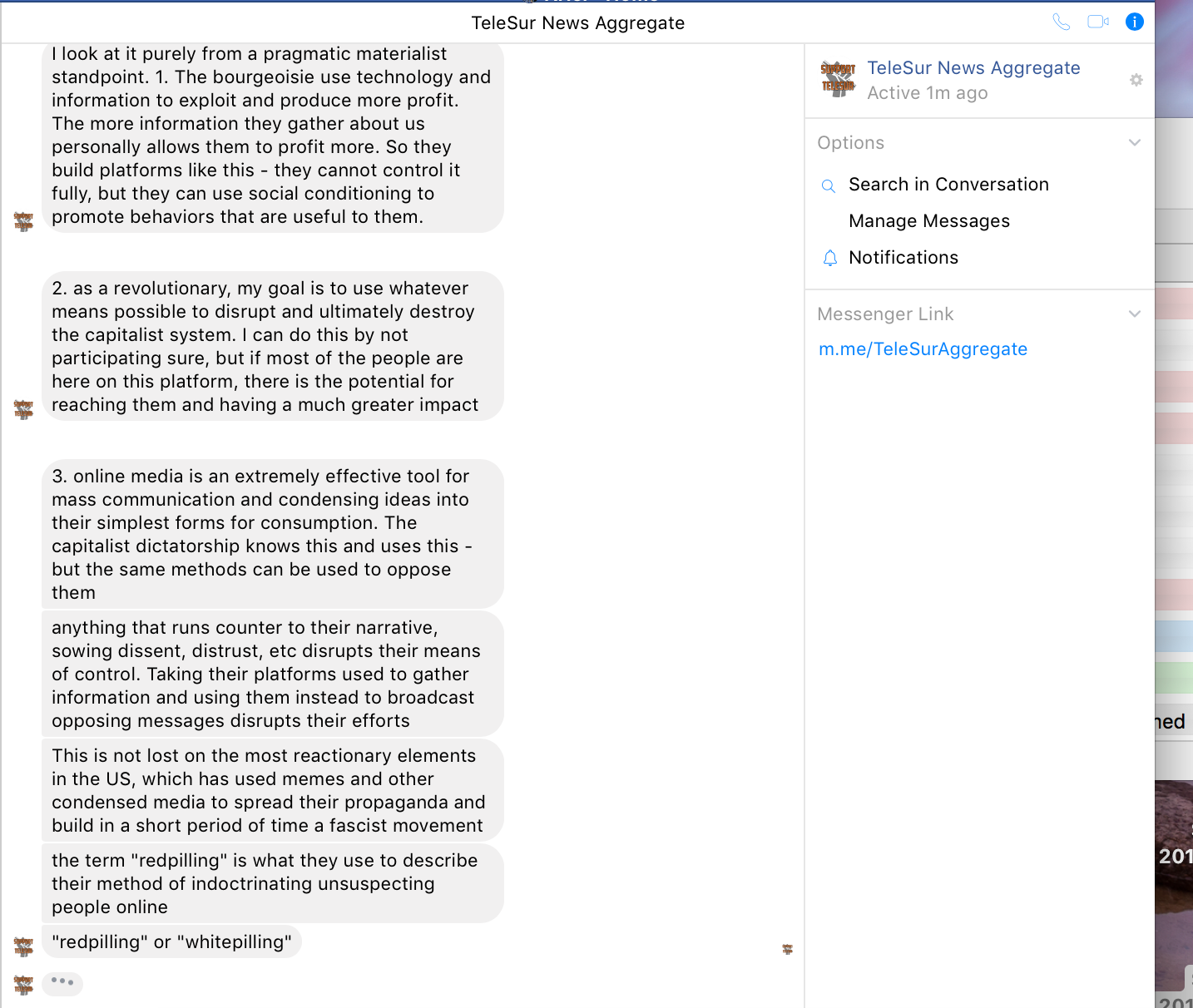

Pablo Vivanco is not, however, alone in violating or directing the violation of Facebook’s Terms of Service. In the wake of the first unpublishing, a new TeleSUR English-related account was created called TeleSUR English Aggregate.

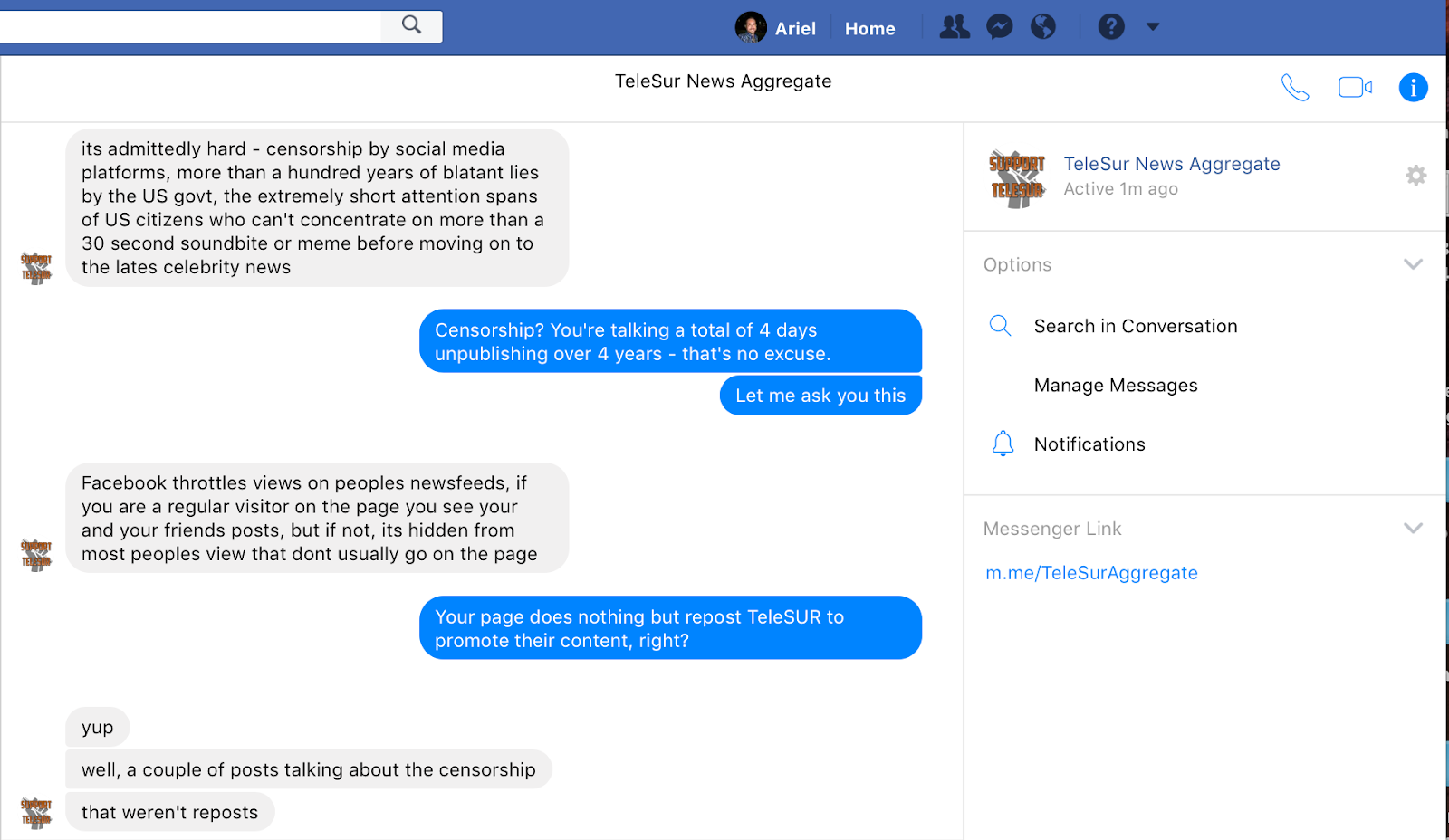

While I was not able to obtain the name of the person running the account from speaking with them, their actions speak for themselves.

During my interview with the admin of the TeleSUR Aggregate account, he openly admitted that the sole purpose of the account was to promote their content.

When I asked him why he did this, he gave me a pretty clear answer: he wanted to disrupt the operation of Facebook.

Examining Evidence Part IV: What An Automated Bot Network Looks Like

It’s not just the purchasing of likes, which helped get TeleSUR English to its roughly half a million likes, that led to its unpublishing, nor was it solely the TeleSUR Play and TeleSUR Aggregate accounts, whose sole purpose is to repost TeleSUR English content.

The false profiles obtained by Pablo Vivanco have done far more than just “like” TeleSUR English’s profile: they also continue to artificially boost its engagement numbers.

If you look through the public comments, likes, and reposts of TeleSUR English’s content, you will uncover hundreds of Facebook accounts that rarely have photos and often have only a small number of friends, but are characterized by a large amount of user activity which consists entirely of re-posting news.

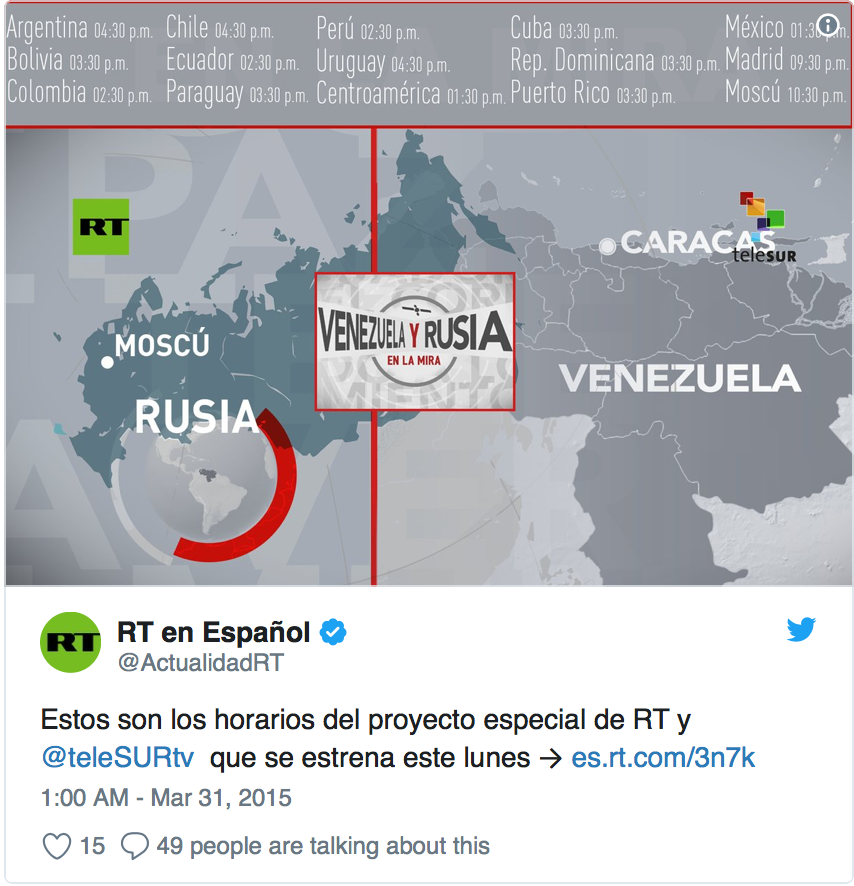
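The pattern described above (few or no photos, few friends, and heavy activity made up almost entirely of re-posted news) can be written down as a simple screening heuristic. What follows is a minimal Python sketch under stated assumptions: the `Account` fields, the thresholds and the sample records are all hypothetical and invented for illustration. It is not Facebook's detection method and uses no real account data.

```python
from dataclasses import dataclass


@dataclass
class Account:
    """Hypothetical summary of a public profile; every field is an assumption."""
    name: str
    photo_count: int
    friend_count: int
    total_posts: int
    reshared_news_posts: int


def looks_like_reshare_account(
    account: Account,
    max_photos: int = 1,
    max_friends: int = 50,
    min_posts: int = 100,
    min_reshare_ratio: float = 0.95,
) -> bool:
    """Return True if the account matches the pattern described in the text.

    All thresholds are illustrative guesses, not values used by any platform.
    """
    if account.total_posts < min_posts:
        return False  # too little activity to judge either way
    reshare_ratio = account.reshared_news_posts / account.total_posts
    return (
        account.photo_count <= max_photos
        and account.friend_count <= max_friends
        and reshare_ratio >= min_reshare_ratio
    )


# Invented sample records, for illustration only.
accounts = [
    Account("sample_a", photo_count=0, friend_count=12,
            total_posts=900, reshared_news_posts=900),
    Account("sample_b", photo_count=240, friend_count=310,
            total_posts=400, reshared_news_posts=35),
]
flagged = [a.name for a in accounts if looks_like_reshare_account(a)]
print(flagged)
```

A heuristic like this only surfaces candidates for manual review; matching the pattern is not proof that an account is automated or purchased.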

Unsurprisingly, given the media agreement between the Russian and Venezuelan state media, they also re-post content from RT and Sputnik.

A number of them also post on behalf of various companies, such as GreenMedInfo.com, which suggests a mix of accounts that are directly controlled and others that are operated by third parties.

Examining Evidence Part V: What A Human Re-Share Network Looks Like

In order to artificially inflate the reach of TeleSUR English, bad actors on Facebook aren’t limited to automated accounts but include coordinated behaviors by a large number of individuals.

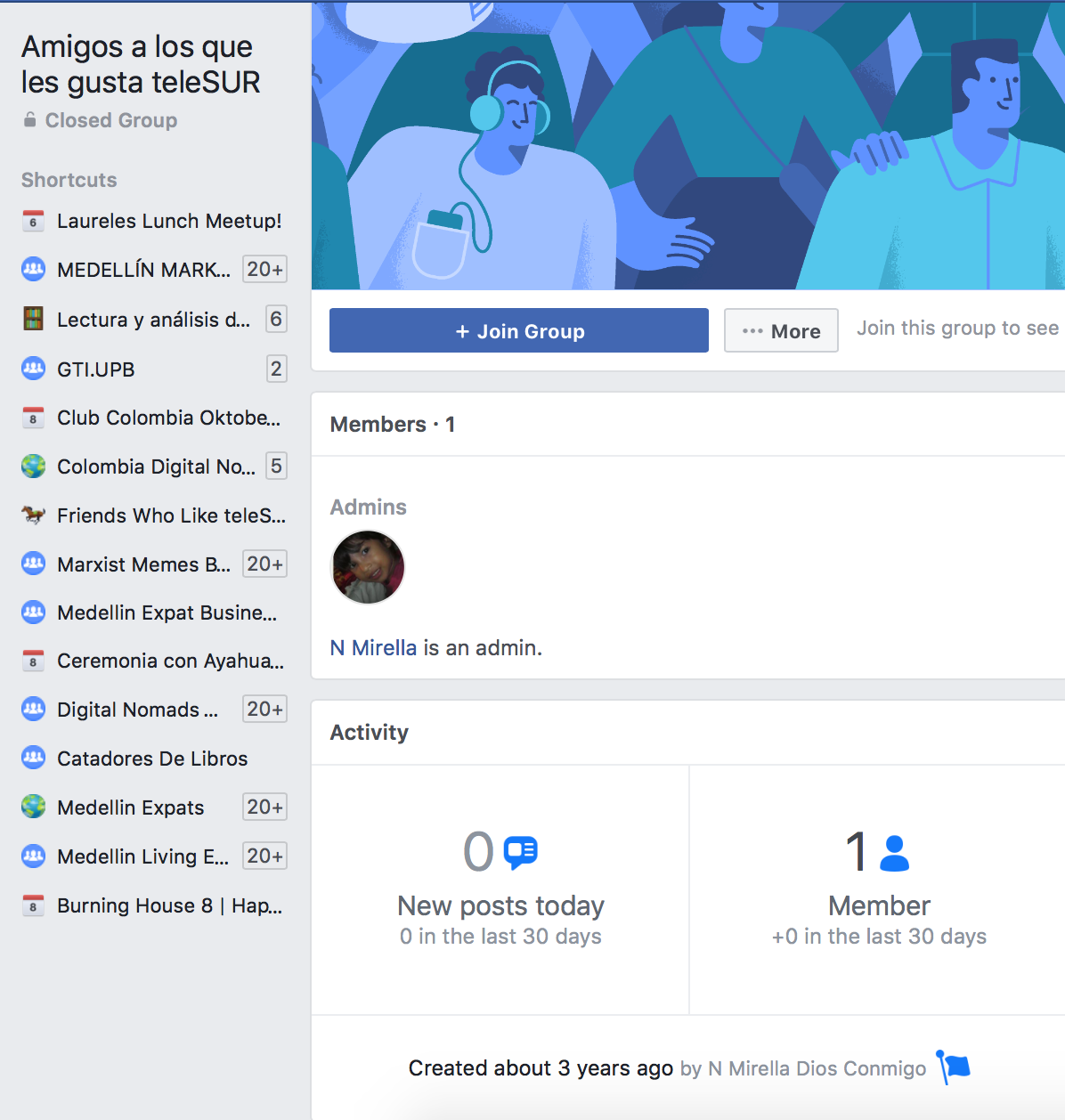

There are over 30 “Friends who like TeleSUR English” Facebook groups. What their function is given the above evidence, I can’t clearly say.

I messaged all the administrators of these groups in February of 2018 and only received two responses, one from someone in Africa and one from someone in India. Both denied being an admin, even though they were clearly listed, and neither would respond to my questions.

Some of these groups are moderated by employees of the Venezuelan government, like the one above. Others by Cubans. One is moderated by Vlaudin Vega, an individual formerly on the U.S. State Department’s terrorist watch list for association with the FARC. The vast majority of these have not been active in years.

What I can say is that they re-publish content into public groups in order to make the content seem more valuable in Facebook’s algorithm.

The Real Reason TeleSUR English Was Briefly Unpublished AND Why Leftist Media Saw a Big Drop in Google Traffic

Just as algorithms can help prevent forest fires, help serial killers get caught, or suggest your next purchase based on your past ones, algorithms are also able to identify a large number of accounts that are spamming the system to drive traffic and increase page rankings.

While this type of marketing effort can lead to big increases in follower numbers, such behavior is prohibited by all the social media platforms as it degrades the user experience.

The reason that many leftist media outlets saw a drop in Google ranking and in traffic being directed to their websites, and why their posts have been deprioritized in Facebook feeds, has nothing to do with selective targeting of companies or political viewpoints and everything to do with Facebook and Google holistically discouraging black hat marketing practices.

In their quest to create greater domain authority, media organizations all over the political spectrum as well as businesses bought followers from companies or created their own portfolios of sock-puppet accounts. They joined back-linking networks and compensated others to engage in practices designed to amplify the appearance of trustworthiness according to what the algorithms searched for and valued.

And then they got penalized for it and for other reasons.

Lest this seem overly abstract, let me give some examples that feature links to TeleSUR English content.

Examining Evidence Part VI: Fake Backlinking

By name alone, Los Angeles Post sounds like it could be a credible outlet, but the small number of followers and the lack of postings over the past 8 months suggest otherwise.

Viewing their website, you’ll learn that there are similarly few current news articles listed on their landing page, which also hints at this being merely a means for marketing companies to monetize companies’ desires for backlinks.

Looking through their back pages, however, you’ll see a large number of links going to TeleSUR English, CounterPunch, MintPressNews and other “alternative news” websites.

Another page which does this is called Russia is not the Enemy. Unlike LA Post, they do not pretend to be a news site, but are simply a news aggregator website that posts content from RT, TeleSUR, CounterPunch, etc.

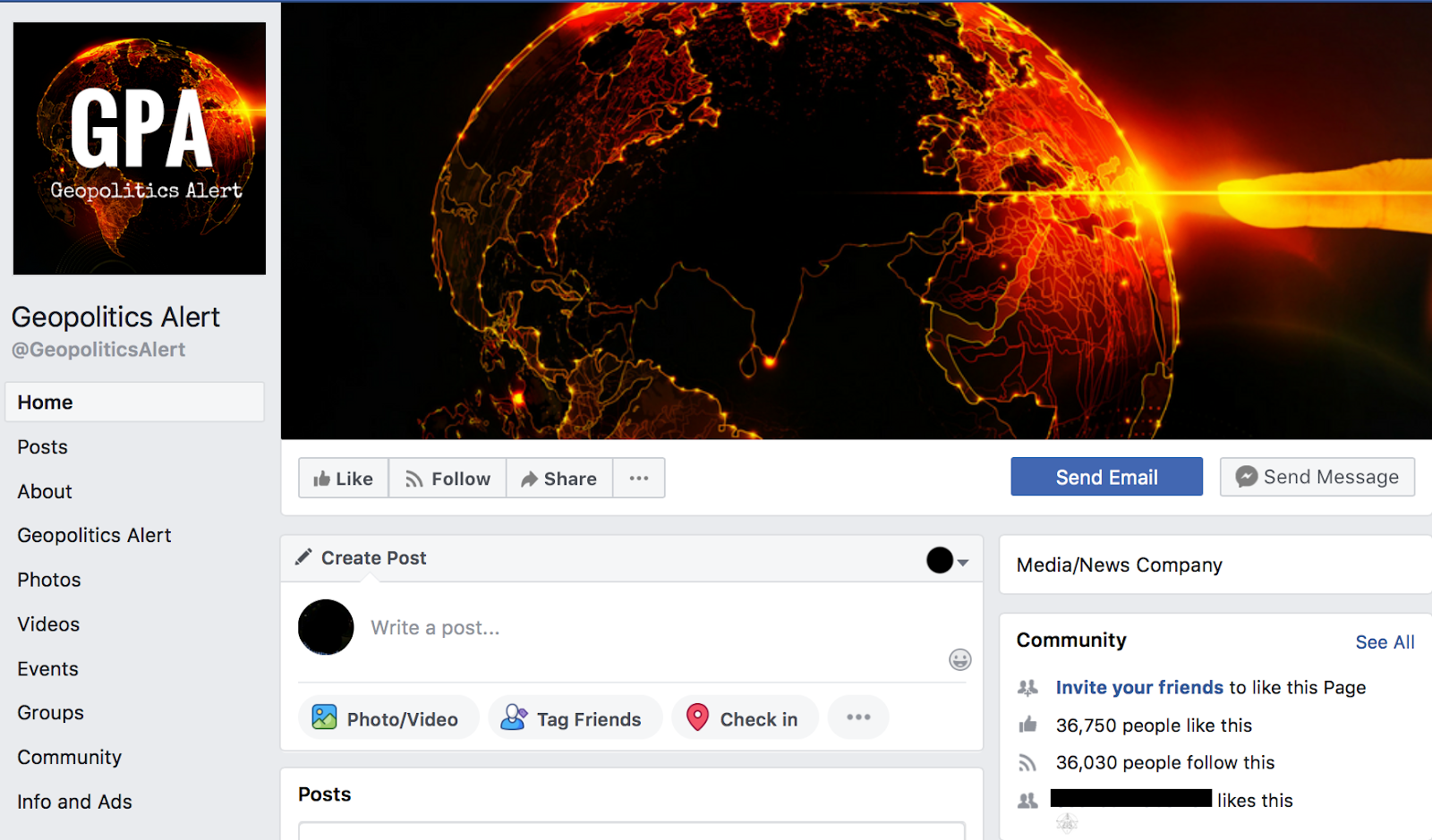

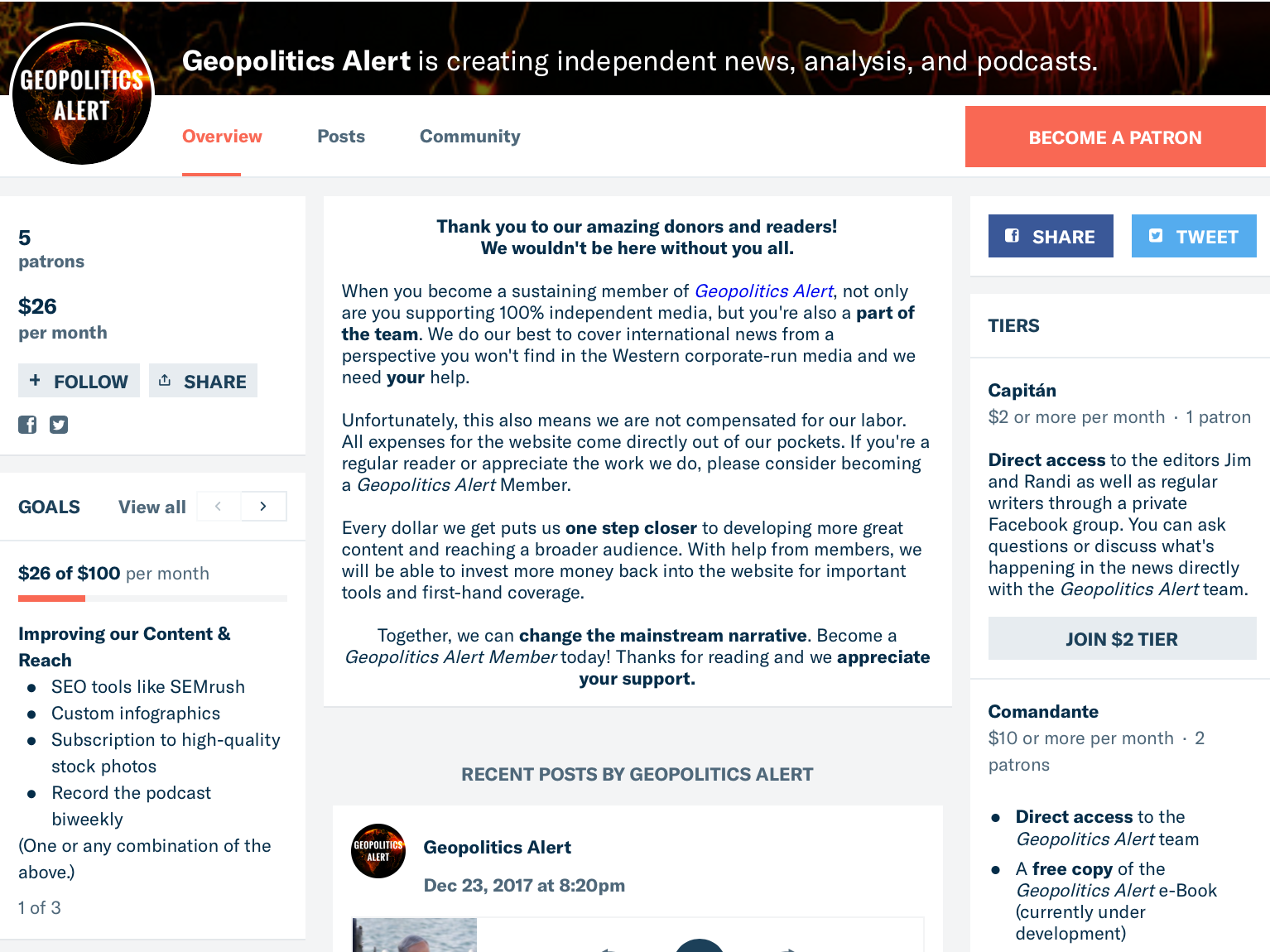

Another example of such a backlinking scheme, but with actual operators, is Geopolitics Alert. Their Facebook page lists 36,700+ people liking it; however, the journalistic pair that makes up this “Media/News Company” has only five Patrons and under 50 listens on their SoundCloud account.

I emailed co-founder Jim Carey to ask him if he has accepted marketing services or money from TeleSUR, a sensible question given that almost a quarter of the content on their website is directly from TeleSUR English, and he denied this.

When asked the same question as to his relationship with other state or private media organizations, Carey refused to answer my questions.

Websites like these, which claim to be funded by donations despite the paucity of income they get by that means, host thousands of new backlinks to leftist news sites such as TeleSUR English and CounterPunch.

Not Censorship, But Technics

Just as there is a difference between censorship and a Facebook page being unpublished due to non-adherence to terms and conditions, so too is there a difference between a conspiracy to silence leftist media and the effects of algorithmic updates that seek to holistically counter the actions of bad agents. Facebook has had to delete millions of such accounts.

Deciding that those with the capital to pay the fees for partnership with such networks shouldn’t be given an advantage is not “anti-democratic”; it encourages democracy. This is why Facebook and Google have changed their ranking systems, and since this is clearly stated on their websites and in the press, the statements of Branko Marcetic, Abby Martin and others are not acts of courageous truth-telling but an attempt to deflect from the truth that groups such as DFR Labs are trying to uncover. Rather than examining what it is such groups do to inform their readers, they hint at global conspiracies.

In doing so, they ignore the fact that for these internet platforms to be more open, democratic and enjoyable for their users, and not a source of marketing spam or disinformation campaigns from bad actors working on behalf of foreign governments, their algorithms need constant refinement.

Is This What Democracy Looks Like?: The Evidence TeleSUR English Won’t Address

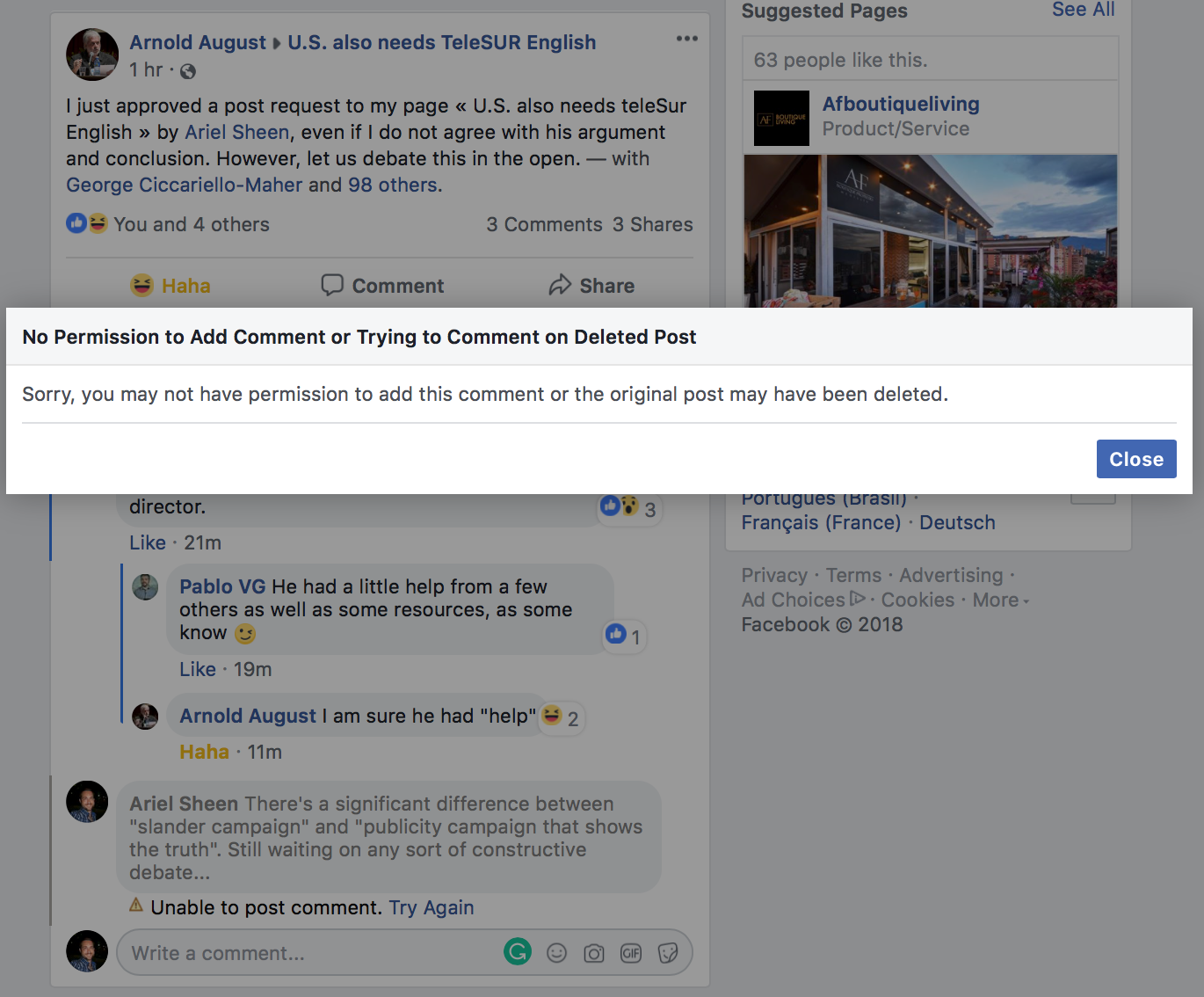

I posted an earlier version of this article in the only Friends of TeleSUR English group that had any recent postings. The group is moderated by Arnold August, a journalist and political scientist specializing in Cuba, and I was hoping to better understand the perspective of the “Friends who like TeleSUR English” given the above evidence. Given that I was previously blocked on Twitter and Facebook by Vice Director of TeleSUR Orlando Perez and former Director of TeleSUR English Pablo Vivanco after asking them to explain as well, I can’t say that I was shocked by what transpired next.

First it was suggested that I was committing slander. Then it was implied that I had received help from “unnamed sources”. Then Nicolaj Leonardo, a writer for RT and TeleSUR English, posted a photoshopped photo of me with a knife, and then I was banned from the group.

This is not what democracy looks like.

To be clear, I do not now work or consult, nor have I ever worked or consulted, for Facebook, the Atlantic Council, the Digital Forensics Research Lab or any other organization, and the only “help” that I’ve received in my research is in the form of information given to me by current and former TeleSUR English employees.

I am a graduate of NYU’s Master’s program in the Experimental Humanities and a doctoral candidate at Universidad Pontificia Bolivariana in their program for Innovation and Technology Management. I have also worked in digital marketing for several years and am the project leader of a team of researchers answering the call for research by Social Science One to depict the relationship between social media, democracy and elections.